When the home wireless starts looking like it needs a refresh, my go-to has historically been an enterprise-grade wireless system.

I've been reading the reviews of the Ubiquiti product line (Ubiquiti UAP-AC-LR) and decided it was time to give them a try, if for no other reason than that many people reported it was A) difficult to set up, B) used by WLAN engineers, and C) cheap (how they do it for this price is pretty cool).

A) Not true

B) OK. I believe that anyone who can install a Java server app could probably do the "home" level configuration work.

C) Yeah, $200, not $2000

I chose 2 of the LR model. Coverage of my home with N required 2 APs, so these will most likely require 2 as well. BTW, a WLAN site survey should probably be done first. I did one for my home for N, so I pretty much knew what the frequency coverage pattern needed to look like.

Received them 2 days after ordering. When Amazon Prime works, it really works.

Unboxing:

Ubiquiti UAP-AC-LR

2 manuals, may open them one day.

A sub ceiling drill-through mounting bracket with screws. Won't be using that.

A PoE injector with power cable. Nice addition if you don't have a PoE switch.

Access Point with mounting bracket.

Controller Installation:

https://www.ubnt.com/download/unifi -> Unifi controller for Windows (going to run it on my media server)

There is also a user guide on this page for the controller.

Java needs to be installed on the computer.
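If you want a quick sanity check before running the installer, a minimal Python sketch like the one below can tell you whether a `java` executable is on the PATH. The function name `java_version_banner` is made up for this example, and the sketch only looks for Java; it makes no claim about which Java version the controller wants.

```python
import shutil
import subprocess


def java_version_banner(executable="java"):
    """Return the first line of `<executable> -version`, or None if it is not on PATH.

    Hypothetical helper for illustration; not part of the Unifi tooling.
    """
    path = shutil.which(executable)
    if path is None:
        return None
    # Many JVMs print the version banner to stderr, so capture both streams.
    result = subprocess.run([path, "-version"], capture_output=True, text=True)
    banner = (result.stderr or result.stdout).strip()
    return banner.splitlines()[0] if banner else ""


if __name__ == "__main__":
    banner = java_version_banner()
    if banner is None:
        print("No java executable found on PATH; install Java first.")
    else:
        print("Found Java:", banner)
```

A `None` result just means nothing named `java` was found on the PATH, which is usually the cue to install it before going further.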

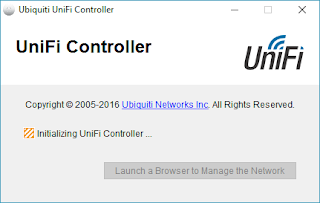

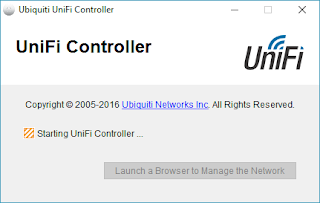

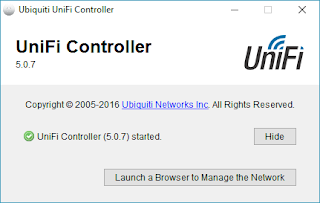

Once installed, this dialog pops up. It's a direct link to https://localhost:8443

Click "Launch a Browser…"
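If the browser does not open, or the page never loads, it can help to confirm that something is actually listening on port 8443. The sketch below is one way to check, assuming the controller runs on the same machine and uses the port from the link above. `port_is_open` is a name invented for this example, and it only tests that a TCP connection succeeds, not that the controller is healthy.

```python
import socket


def port_is_open(host="localhost", port=8443, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    Hypothetical helper for illustration; 8443 is the port from the link in the post.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and name resolution failures.
        return False


if __name__ == "__main__":
    if port_is_open():
        print("Something is listening on localhost:8443.")
    else:
        print("Nothing answered on localhost:8443; is the controller running?")
```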

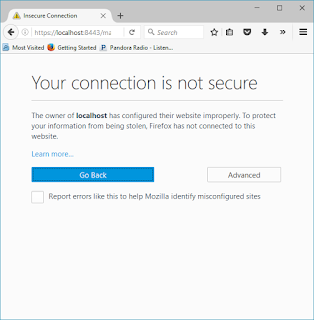

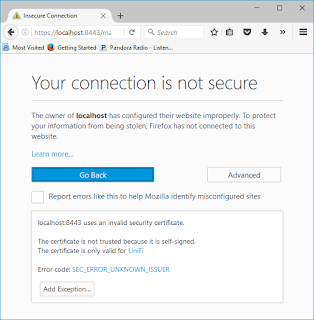

You may see this: Click "Advanced"

Click "Add Exception…"

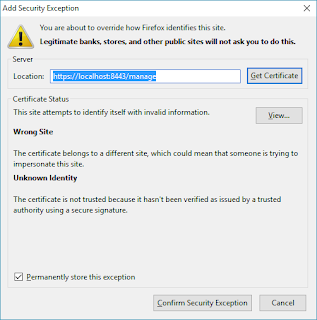

Click "Confirm…"

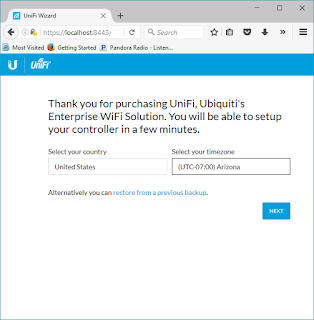

Verify your Country and Timezone

Note: This is where it gets interesting. If you have a single VLAN home network, no issues. If you have multiple VLANs, make sure the AP and the device the server is running on are in the same VLAN.
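A VLAN is a layer 2 thing, so you can't read it straight off an IP address. If you assume each VLAN on your network maps to exactly one IP subnet (common at home, but an assumption), then comparing the subnets of the AP and the controller machine is a rough proxy. The addresses and the /24 prefix below are made-up examples, and `same_subnet` is a hypothetical helper.

```python
import ipaddress


def same_subnet(ip_a, ip_b, prefix_len=24):
    """Return True if both addresses fall in the same network for the given prefix length.

    Assumes one subnet per VLAN, which is a convention, not a guarantee.
    """
    net_a = ipaddress.ip_interface(f"{ip_a}/{prefix_len}").network
    net_b = ipaddress.ip_interface(f"{ip_b}/{prefix_len}").network
    return net_a == net_b


if __name__ == "__main__":
    controller_ip = "192.168.1.10"  # example address for the media server
    ap_ip = "192.168.20.5"          # example address handed to the AP
    if same_subnet(controller_ip, ap_ip):
        print("Same subnet; probably the same VLAN.")
    else:
        print("Different subnets; check which VLAN the AP's switch port is in.")
```

With the example addresses, the two hosts land in different /24 networks, which is the situation the note above warns about.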

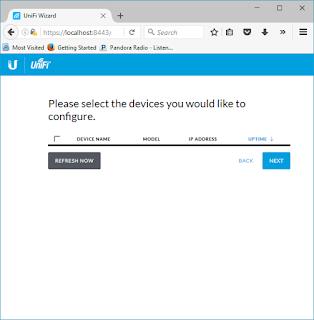

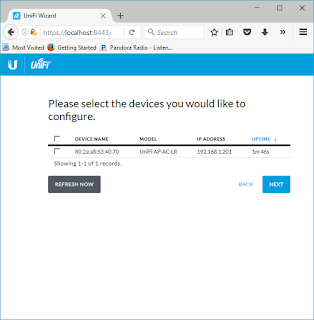

The AP light will turn white. Select "Refresh Now"

Select the checkbox next to the AP and select "Next"

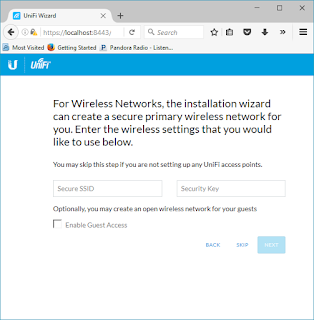

Put in the SSID and Wireless Key you want to use.

Select "Next"

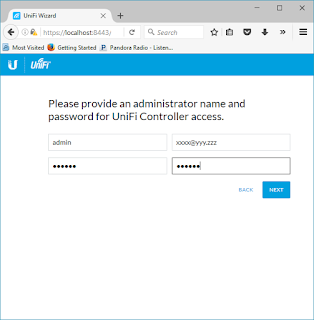

Put in the administrative information. Select "Next"

Then select "Finish"

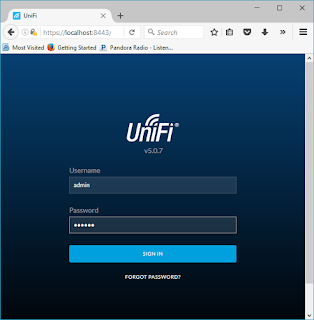

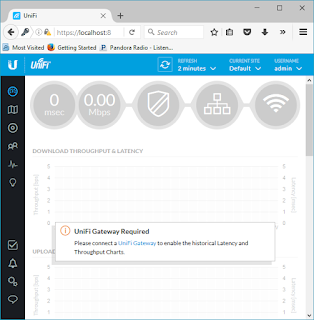

The installation now takes you to the Unifi controller.

Sign in with the administrative name and password you set earlier.

Select the Devices icon, the third icon in the black menu on the left.

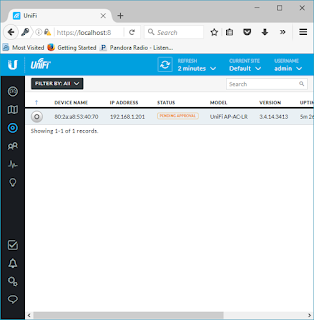

The AP(s) will be auto-magically discovered if they are connected.

Notice "Pending Approval" in the Status.

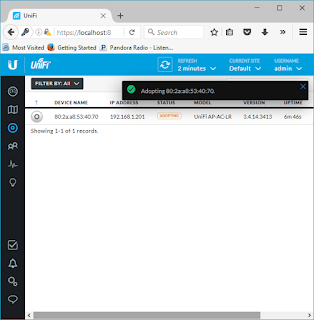

Scroll to the right of the window and select "Adopt" under the Actions menu.

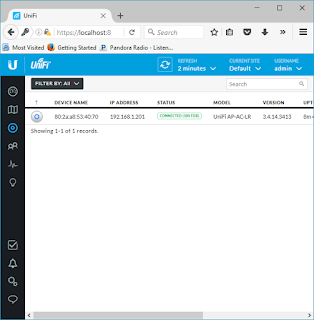

It is now "CONNECTED" and the SSID is broadcasting. The light on the AP switched to a Blue hue.

Feel free to use the user guide to customize your wireless.

AP Installed:

Used two of the expansion anchors in the screws pack.

Cute. Lots better than the 6 legged black spider I had up there before. Wife likes the looks of it a LOT better.

Entire Installation: About 1.5 hr. It took longer to get the old AP off the ceiling than it did to configure the software and install the APs. The locking mounting bracket of the old AP gave me so much trouble.

Yeah, I need to patch the hole where the anchor pulled out from the last AP.

Final thoughts after using it for a couple of days.

If you are in a relatively small living space, use the wireless on your router. I'm not sure you'd get much more out of this solution.

If you are living in a larger space that may require 2 or more APs for coverage, consider this AP. Also, consider the single channel roaming configuration if you want to be really cutting edge.

It's provided really good performance at distance. More than I expected for approximately $200 and as good as I was getting out of a major enterprise brand Controller plus WAPs of the 802.11n generation. Also, "way better" than 3 of the current top of the line SOHO brand 802.11ac routers I have.

I won't be taking them down anytime soon. Hope I get the same 6 years of use out of these that I did with the previous APs. Even if I didn't, I could replace them 10 times over and it wouldn't cost more than the previous installation.

Recommendation: HIGH