A doozy of a quote from @swardley

Thursday, October 24, 2019

Friday, October 18, 2019

Thursday, September 26, 2019

Orientation and the sad face

Without orientation, strategy will make little sense.

Consider the scenario where it is vital that you get to a destination. You’ll need a map. The orientation of the map can change the meaning, or the perceived meaning, of the directions you’ll move in. The tried and true method of orienting a map is to align it using the “compass rose” or another cardinal direction indicator.

By Abraham Cresques - This image comes from Gallica Digital Library and is available under the digital ID btv1b55002481n, Public Domain, https://commons.wikimedia.org/w/index.php?curid=15582034

If you’re using a GPS, that’s all well and good. GPS has orientation built-in*. If you’re using verbal directions, the directions are as important as the starting point, but I digress…

Without orientation, there is no situational awareness, and the strategy is specious at best. Furthermore, motion is not well defined. Directions are difficult (kind of like the verbal directions above).

Consider the OODA loop as Simon Wardley drew it to describe the two types of why, and you’ll immediately discover orientation.

By @swardley, Wardley Maps, https://medium.com/wardleymaps/on-being-lost-2ef5f05eb1ec

In the simplest of measures, you move around this strategy cycle by gut feeling, often without even knowing it. There’s nothing wrong with this; it’s actually how people operate, and they will continue to do so until our #AIOverlords take over.

It is far more problematic when the actions affect other people.

What happens in business, possibly more than we care to admit, is that ‘gut feeling’ becomes the action of purpose. As described by Powermaps.net (edited), the ‘why of movement’ is eliminated from the decision process.

History and life have taught us that this method works individually, but when it’s applied to a group action, the results are about as successful as reading tea leaves. This is because ‘gut feeling’ decisions are based on purpose alone.

For anything larger than an individual, this causes disorientation. Leadership should position the business and the people for movement, expecting some result. What they end up doing with gut feeling is expressing the purpose and then wondering why the action was not well executed.

It takes planning and an understanding of the climate (financial, business, technological, etc.). It takes planning and an understanding of WHAT movement causes WHAT result. And that "WHAT result" is so very important in determining your effectiveness...

On Twitter, I jokingly said that if Simon had drawn this OODA loop slightly differently, it would have become a cute emoticon, a sad face, capturing both the traumatic effect of gut feeling on strategy and the poor results of the ‘gut feel’ action. Here’s what I was thinking….

* https://twitter.com/JeffGrigg1/status/1177551001261359104 brings up the lack of software attributes in simple GPS vs. integrated navigation software, and the execution of α in modern systems. (edited)

Thursday, September 5, 2019

The IT Toolbox #007 - Definitions #3 - drink the SHIFDX

Drinking the sugar high inducing flavored drink mix.

Because of ™ and © we should probably call it SHIFDX.

It is the tendency of people to indulge in exuberance, especially when it comes to marketing information.

In general, it's an important part of the technology industry, because it gets the word out about new technologies.

In particular, it leads to very poor business decision making.

Consider every decision made in the early hype of a technology and where that technology fits in the solution stack today.

Odds are:

A) it made its way along an evolutionary path and the hype died off - we actually figured out what it was really good for

B) it isn't what the marketing perception projected - it does something in the realm of what marketing said

C) it is being impacted by a new technology/integration/abstraction - someone figured out an enhancement or better way of making/doing

D) it is either WAY cheaper or WAY more expensive - yeah, go figure, you have to experience it to actually understand what it costs

E) except for the companies that created the product, the market changing benefit has ... changed

My suggestion, if you're going to "drink the SHIFDX", do it in moderation and make sure you hand your keys to someone until you're sure you can drive again.

Thursday, August 29, 2019

The IT Toolbox #006 - Multi-Cloud Strategy

A multi-cloud strategy is halfway between two types of thinking.

It's also not thought of as an optimal technical solution for a business problem.

But, it's the only way for late majority users to adopt the new technology lifecycle. It's also the elephant in the room.

Let's start with Rogers' bell curve:

Figure 1. Rogers' bell curve. Credit: Wikipedia.org
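The adopter categories in the bell curve come from slicing a normal distribution of adoption time at whole standard deviations from the mean. A minimal Python sketch, assuming a standard normal distribution and the usual cut points (two and one standard deviations below the mean, the mean, and one above it), reproduces the category shares; the function names are illustrative only.

```python
import math


def normal_cdf(z: float) -> float:
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def adopter_shares() -> dict:
    """Share of adopters in each of Rogers' categories.

    Assumes adoption time is normally distributed and the categories are cut
    at -2, -1, 0 and +1 standard deviations from the mean.
    """
    cuts = [-math.inf, -2.0, -1.0, 0.0, 1.0, math.inf]
    names = ["innovators", "early adopters", "early majority",
             "late majority", "laggards"]
    # Each category is the probability mass between two consecutive cut points.
    return {name: normal_cdf(hi) - normal_cdf(lo)
            for name, lo, hi in zip(names, cuts, cuts[1:])}


if __name__ == "__main__":
    for name, share in adopter_shares().items():
        print(f"{name:>15}: {share:5.1%}")
```

Run as a script, it prints roughly 2.3%, 13.6%, 34.1%, 34.1% and 15.9%; Rogers' published figures round these to 2.5%, 13.5%, 34%, 34% and 16%. The late majority discussed in this post is the second 34% slice, just past the mean.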

Conceptually, the businesses that are going to use a public cloud strategy effectively are the ones that are arguably already using it. They were the innovators: AWS, Google, Azure, etc. What's interesting is that, in the case of the first two, the lifecycle that led to public cloud was an arbitrage of their excess capacity, a way to wring more value out of infrastructure already in place.

The "earlies" saw this as a starting point for their needs. Through consumption of a defined model of delivery, their use cases fit the new lifecycle model. At any relative scale, public cloud was a way to reduce the business decision of build vs. consume. Netflix is an incredibly good example of an "early": using the new lifecycle model literally made it possible for them to concentrate their effort on developing their product.

The late majority has a different business problem than the innovators and the "earlies."

They desperately want to be able to take advantage of the advances they perceive in the technology change:

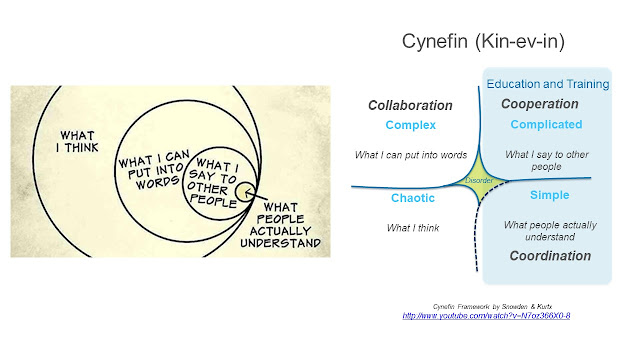

Figure 2. Enterprise Virtualization vs Public Cloud - Link

I've previously described the state of advancement of enterprise virtualization vs. @swardley's Public Cloud map, using his technique (above).

The difference under the Wardley-mapped model, though, is that the use case doesn't align cleanly.

It is also why there's a contentious argument in the minds and words of business owners.

During the peak of expectations, we hear statements like this:

"We're moving our workloads to public cloud."

"We'll be 100% public cloud in 2 years."

In despair, the story changes.

"Public cloud is too expensive."

"Public cloud is not secure."

This is also why Workload (or Cloud) Repatriation occurs.

With enlightenment, the story changes, yet again.

"We're implementing with hybrid cloud, so we can take advantage of new technologies and techniques."

Today, anyone that says they are moving from legacy to hybrid cloud is perceived as a laggard.

Consider this:

When a business from the "earlies" in public cloud moves to hybrid, it is a conscious business decision. It's thought of as forward thinking.

Consider the infrastructure necessary to deliver Netflix today. It's not pure public cloud. In order to meet the content delivery goals with their customers, they built a Content Delivery Network (CDN), integrated with their platform. It is hybrid.

When the public cloud innovators and the "earlies" start to invest in on-prem workload execution, it is hybrid.

Multi-Cloud Strategy is meeting in the middle ground.

It is a legitimate tool in the #ITToolbox.

The immediate future is hybrid.

Monday, August 12, 2019

IoT - Wardley Maps WAR strategy

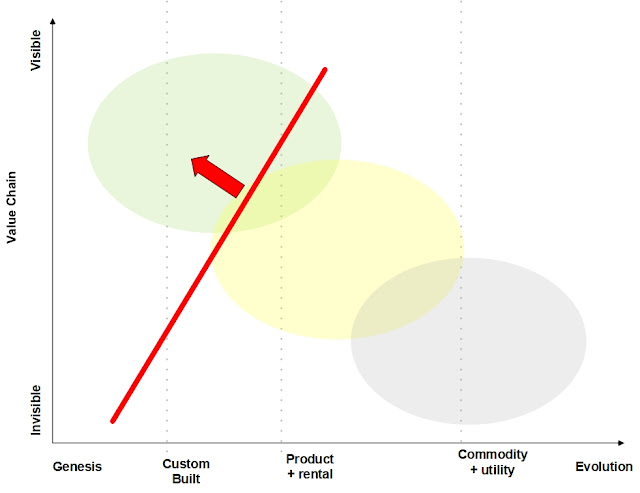

This conversation starts from Chapter 9 of Wardley Maps, where it might be useful to use a map to look at the influence of predictability on a specific topic.

The next weak signal leading to predictability from Figure 106, future points of war, is what I'm looking at for the Internet of Things (IoT).

I'm using a map that I've been toying with for a while. The concept is relatively straightforward: what actions will lead to the industrialization of IoT?

We'll start with what the map looks like for one aspect of IoT; I'm choosing data presented to the user that has some value to them.

Figure 1. IoT User Visualization - Wardley Map

Therefore, if you're building IoT capability, the areas in the upper left are the areas you're going to want to invest in. A simple representation of this idea on a Wardley Map looks like Figure 2.

Figure 2. High, Moderate and Low Value on a Wardley Map

Figure 3. Methods overlay on a Wardley Map

Figure 4. Slow attack areas on a Wardley Map

Figure 5. Fast attack areas on a Wardley Map

Figure 6. IoT WAR on Wardley Map

Tuesday, July 16, 2019

The IT Toolbox #005 - Thoughts on Cybersecurity

Define a set of cybersecurity rules.

Define an architecture (be it physical, platform and/or application).

Make sure the aforementioned rules can be applied. (It doesn't matter if they are perfect, NONE are.)

Fix the rules or what the rules break.

For the love of all that is holy, PATCH in a reasonable amount of time. (If you use a service provider, make it a contractual obligation and/or a Key Performance Indicator (KPI).)

Make sure there is a mechanism to verify the patches are in place.

Make sure there is a mechanism to verify firewall (FW) rules are CORRECT.

Segment ALL applications. Microsegment all unique elements of all applications. Use SSL/TLS.

PATCH everything in a reasonable amount of time (yes, it's a repeat, but many don't hear it the first time).

Be prepared to burn down ANY exposure. Have a plan in place in the event this must happen.

Have a reporting and notification plan in place.

When an exposure is identified (and it will be) make sure you use the reporting and notification plan.

If you EVER have to break ANY of the self-imposed cybersecurity rules, segregate and enclave to limit exposure.
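Two of the rules above, verifying that patches are in place and verifying that firewall rules are correct, lend themselves to simple automated checks. A minimal Python sketch, assuming a hypothetical inventory of hosts (each with the release date of its oldest unapplied patch) and a hypothetical list of firewall rules compared against an approved set; the 30-day window and all class, field and function names are illustrative assumptions, not a standard or a product API.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional, Set, Tuple

# Hypothetical "reasonable amount of time" for patching, e.g. a contractual KPI.
PATCH_WINDOW = timedelta(days=30)


@dataclass
class Host:
    name: str
    oldest_missing_patch: Optional[date]  # release date of oldest unapplied patch, None if current


@dataclass
class FirewallRule:
    source: str          # source segment, or "any"
    destination: str     # destination segment, or "any"
    port: Optional[int]  # destination port, or None for all ports


def overdue_hosts(hosts: List[Host], today: date) -> List[str]:
    """Names of hosts with a missing patch older than PATCH_WINDOW."""
    return [h.name for h in hosts
            if h.oldest_missing_patch is not None
            and today - h.oldest_missing_patch > PATCH_WINDOW]


def suspect_rules(rules: List[FirewallRule],
                  approved: Set[Tuple[str, str, Optional[int]]]) -> List[FirewallRule]:
    """Rules that are overly broad ("any" or all ports) or not in the approved set."""
    flagged = []
    for rule in rules:
        too_broad = "any" in (rule.source, rule.destination) or rule.port is None
        is_approved = (rule.source, rule.destination, rule.port) in approved
        if too_broad or not is_approved:
            flagged.append(rule)
    return flagged


if __name__ == "__main__":
    today = date(2019, 7, 16)
    hosts = [Host("web01", date(2019, 5, 1)), Host("db01", None)]
    rules = [FirewallRule("dmz", "data", 5432), FirewallRule("any", "data", None)]
    print(overdue_hosts(hosts, today))  # ['web01']
    print(suspect_rules(rules, {("dmz", "data", 5432)}))
```

Flagging anything not explicitly approved, even if it looks narrow, is a deliberate choice here: it treats the approved set as the definition of "CORRECT" and leaves exceptions to be enclaved and documented rather than silently accepted.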

Tuesday, July 9, 2019

The IT Toolbox #004 - Definitions #2

AI is not AI

AI is a marketing buzzword (OK, it's an acronym, or even an initialism, but bear with me)

Definitions of AI I've personally witnessed:

AI is stealing jobs (well, boring, repetitive, mind-numbing jobs)

AI is Machine Learning (it can/could be, but mostly isn't...yet)

AI is data processing (so is paper shuffling)

AI is a Virtual Agent (actually partially true, at least the native language parts)

AI is The Terminator (nope, that's a movie starring Arnold Schwarzenegger, and it's on my must-watch list)

AI is Analytics (sorry, Analytics is Analytics, representations and uses of data)

AI is a self-driving car (hopefully a self-driving car is more than AI; wishing for seats, an engine, etc.)

AI is Deep Learning (might be true, how supervised is the learning?)

AI is self service (well, ok. Gives supermarket self checkout a new meaning.)

AI is Robotic Process Automation (not so much; it's more a set of response triggers, but bits could be)

If you’d like to read a thoughtful description of Artificial Intelligence, have a look at this Wikipedia article: https://en.wikipedia.org/wiki/Artificial_intelligence (15 pages and 375 references, AND 18 disambiguation references)

So, if you're talking about Artificial Intelligence (AI), consider refining your definition and talking points around whether there is (or is not) a neural network being trained to mimic "cognitive" functions.

If not, call it what it really is rather than what someone is marketing.

Wednesday, July 3, 2019

The IT Toolbox #003 - It is only a little off

IT is complicated ...

Here are some sensors:

Primary Adoption Strategy of Digital Transformation [1]

(surveyed IT departments)

34% - heterogeneous IT integration (basically picking parts that work for a particular purpose)

27% - entirely public cloud

24% - entirely private cloud

16% - hybrid cloud

Fewer than half of enterprises (surveyed) have a mature cloud adoption strategy:

12% - self report as mature

37% - self report as somewhat mature

84% [2] of public cloud customers will repatriate some workloads to private infrastructure in 2019

Between 40% and 80% of enterprises will fail to deliver traditional workloads on public cloud [3, 1]

Enterprise Data Centers are closing - incorrect [4]

Enterprise Data Center spending continues [5, 6]

x86 market growing at 19% [7]

Major x86 vendors are growing market share and revenue [8]

There's a massive misunderstanding about the definition of the Digital Transformation end state. [9]

-- Publicly available references --

Friday, June 28, 2019

Tuesday, June 25, 2019

The IT Toolbox #002 - Edge and Central

We often hear a reference to the ‘IT Pendulum’, but we should forget the idea that it is an all-or-nothing fight between good and evil.

The first point: it’s definitely NOT an all-or-nothing fight. Each one of these technologies continues to exist today.

The more interesting thing that happens to make each of these relevant in their time is the abstraction and evolution pattern that occurs.

Consider this pattern:

Mainframes becoming remotely administered: Central -> Edge

Distributed computing replacing Remote Terminal (from mainframes): Edge -> Edge

Client-Server replacing Distributed Computing: Edge -> Central

Cloud Computing replacing Client-Server: Central -> Central

Distributed Edge replacing Cloud Computing: Central -> Edge

The Pattern consists of computing at the edge or in a central location. Nothing really magical about that, but when a NEW technology comes in, it’s almost always because we’ve either abstracted complexity away from the solution OR an evolution step in capability was enabled.

Take the case of Client-Server replacing Distributed Computing. The effect was to move computing to a central location, made possible largely by a significant increase in network bandwidth in the mid-1990s. This is an example of an evolution pattern.

Cloud computing replacing Client-Server happened through an abstraction pattern. Virtualization of computing systems allowed substantial recovery of compute investment. It is also making smaller abstractions possible: think containers and serverless. (This also supports one of my favorite quotes, from Rick Wilhelm @rickwilhelm: "Containers allow creation and destruction of application environments without drama or remorse.")

These smaller abstractions effectively make the next evolution transition possible, moving workloads to the Distributed Edge, because … why should a programmer care where the program runs?

So, the IT Pendulum is only a pendulum if you look at it in two very myopic dimensions.

The fight between good and evil, it isn't. It's evolution.

Tuesday, June 18, 2019

The IT Toolbox #001 - Definitions

It is vital that IT people communicate with the same lexicon. This helps to establish definitions, which provide the specificity necessary to discuss complex topics.

The marketing engine, not to mention the general media, does little to correct ambiguity. It can be argued that the marketing engine tolerates ambiguity, and even ignorance, in the hopes of capturing the next big headline.

This isn’t new. Key terms do matter. They are refined over time.

To make matters worse, we often don’t know ~exactly~ what these terms mean until they are ingrained in a pattern that everyone comes to accept.

One of the best examples is Cloud Computing, “The Cloud” or just simply ‘Cloud.’

The problem with ‘Cloud’ is it doesn’t fit the definition of what everyone believes it to be.

The various definitions I’ve come across include:

Cloud is hosting on the internet. (True-ish, but not very meaningful.)

Cloud is Infrastructure as a Service. (It’s not only that, but that’s OK.)

Cloud is Platform as a Service. (A better definition, but also incomplete.)

Cloud is Cloud Native applications. (This is about as ill-fitting as Infrastructure as a Service.)

Cloud is Serverless. (No, it’s not. Never was, never will be.)

Cloud is where I’m moving all of our Enterprise Applications. (That’ll be fun.)

Cloud is Digital. (as in Digital Transformation, everyone talks about it, but few know how to do it.)

If you’d like to read a thoughtful description of Cloud, have a look at this Wikipedia article: https://en.wikipedia.org/wiki/Cloud_computing (16 pages and 125 references, 7 deployment models and 6 service models, AND 23 disambiguation references)

What I’m getting at is that calling anything ‘Cloud’ lacks definition; its meaning has no precision whatsoever.

Please make sure others know what you mean when you say Cloud.

Monday, May 20, 2019

XXXX-as-a-Service (aaS)

The utility of an as-a-Service capability in IT depends heavily on where the demarcation of use falls.

It's much easier to see it in a graphical depiction.

In general, Infrastructure and a portion of the virtualization/abstraction layer are what nearly all use cases will act upon (if we set aside the licensing issues for workloads that are more economical on bare metal).

On top of that you'd build out the as-a-Service for Infrastructure, which at the base level is virtualization/abstraction/operating system automation and management.

Then you walk up the technology chain, including the management of the constructs applications need in order to be automated through build, test and delivery.

The last step on the technology chain is the delivery of what should be the most important element (though even today, we have people worried about HOW the underlying physical elements are doing): the Application delivery.

Tiers of 'as-a-Service' delivery
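The demarcation idea can be made concrete by treating the tiers described above as an ordered list and each as-a-Service offering as a cut point in that list. A minimal Python sketch, assuming the four tiers from this post and a hypothetical mapping in which IaaS delivers the bottom two tiers, PaaS the bottom three, and SaaS all four; the names `TIERS`, `DEMARCATION` and `split_responsibility` are illustrative, not from any standard.

```python
from typing import Dict, List, Tuple

# The delivery tiers described in the post, from the bottom of the chain up.
TIERS: List[str] = [
    "infrastructure",
    "virtualization / abstraction / OS automation and management",
    "build / test / delivery automation",
    "application delivery",
]

# Hypothetical demarcation points: how many tiers, counted from the bottom,
# are consumed as a service under each model. Illustrative assumptions only.
DEMARCATION: Dict[str, int] = {"IaaS": 2, "PaaS": 3, "SaaS": 4}


def split_responsibility(model: str) -> Tuple[List[str], List[str]]:
    """Return (tiers consumed as a service, tiers the consumer still manages)."""
    cut = DEMARCATION[model]
    return TIERS[:cut], TIERS[cut:]


if __name__ == "__main__":
    for model in DEMARCATION:
        provided, managed = split_responsibility(model)
        print(f"{model}: {len(provided)} tiers as a service, you manage {managed}")
```

Everything above the cut is what the consumer still has to build, automate and operate, which is why the utility of a given offering depends on where that line falls for the use case.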

Friday, May 10, 2019

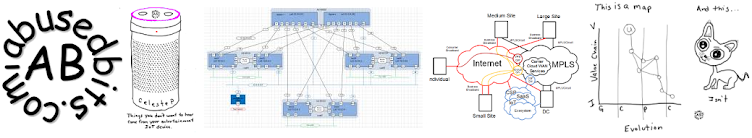

The Internet meme vs Cynefin

Stumbling across an unexpected relationship can be interesting. I was looking at a blog post by Kees van der Ent on LinkedIn and realized that the header graphic could be related to Cynefin.

Thanks to Dawn ONeil's presentation on checkup.org.au, I was able to rapidly mock up the concept, mapping the meme to the Cynefin graphic, and it became apparent that there is a relationship.

In Cynefin, Complex Collaboration is all about "putting thoughts into words." It has to be described to work with complexity. Consider how wide the fields of Information Technology have become: things as simple as definitions and TLAs may not mean the same things to people from different technology backgrounds.

Without this specific step in the framework, it is nearly impossible, except through experimentation, to achieve anything remotely close to education.

This lends itself heavily to cooperation, taking complex concepts and reducing them to something relatable. Quite literally "what I say to other people."

Reducing disorder even further is where understanding really takes hold. Concepts are extracted, reduced and put in overlay or contrast in such a way that they become "what people actually understand."
