It is intended to be the start of a conversation, not the end of one.

The argument I'm making is that hardware, in the classical/traditional sense of computing, is progressively being decoupled from the execution of the application. To be sure, we saw some of this with the introduction of virtualization, but there the hardware wasn't so much decoupled as pushed so far down the value chain that application architects simply quit thinking about it.

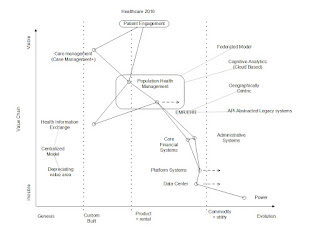

To understand how we get to that state, we should probably start with what has happened to hardware. A high-level depiction is provided in Figure 1:

Figure 1. Value Chain Evolution of Hardware in Computing

The map starts with mechanical computing at Genesis (lower left) and shows each high-level hardware change, following IDC's platform representation: 1st Platform in the range of Mainframe to the "still" present Mini Computer, 2nd Platform from Micro Computer to Server Virtualization, and 3rd Platform with Cloud Computing and upward, with outliers just kicking off in IoT and Private Computing.

Figure 2. Value Chain of Software Evolution in Computing

Viewed from a hardware perspective, software has a more complex value chain than the hardware itself. While we could argue the position, the relative perspective can be used to illustrate the mechanics that have driven software and subsystems toward commodity.

A case in point: Operating Systems need to be a product, providing ease of use, but the input to something like computer languages has to be custom because of the deep learning required to learn and master them.

This is as much a matter of the cost of the products' output as of the education needed to create the products and make them useful and usable.

Ultimately there's something unique happening today that is not necessarily the easiest thing to describe. It's called #serverless.

We've done things like this before with portability of languages like Java, where the execution method was abstracted away from the hardware sufficiently to run it on multiple hardware devices.

Today we're thinking about a more absolute abstraction of the hardware. This will make it possible to decouple multiple and complex languages, libraries and applications from the hardware.

To show where and how this is happening, I'm overlaying these two value chains. Apologies if I've just offended the #Mapping #Gods.

Figure 3. Combined Hardware - Software

The upper right-hand corner holds the primary elements for decoupling hardware from the software. Hardware is still there, but is only important to those who have to care about it.

The feedback loop of Agile Development, with Loosely Coupled, Message/Event-driven design and Backflow tuning of the software, plays a critical role in reaching the state of the Decoupled Application Function. Quite a lot of this is to make the application more aware of itself.

In the hardware area, some element of containerization (capture of all relevant parts of the execution of function of an application or sub application) moves the awareness of the application design away from the hardware to the operating system (arguably another abstraction of the function of the hardware).

Containerization provides the means to swiftly move the application or subcomponents from one hardware underlay to another (think one server to another or one cloud to another).

Ultimately this makes it possible to create components of applications that can become highly standardized or industrialized. Something we might call #microservices.

These components, being decoupled, can be called upon independently by API. Sufficiently optimized, they can be spun up and down on demand for the execution of a particular function.

The application could simply become a cascade of API calls between decoupled application functions to create an output.
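As a rough illustration of that cascade, here is a minimal Python sketch. Everything in it is hypothetical: the function names (`parse_order`, `price_order`, `format_receipt`), the price table, and the `cascade` helper are invented for the example, and plain in-process calls stand in for what would be API calls between independently deployed functions.

```python
from functools import reduce
from typing import Callable, Dict, List

# A payload is just a dictionary passed from one function to the next.
Payload = Dict[str, object]
Function = Callable[[Payload], Payload]


def parse_order(payload: Payload) -> Payload:
    """Turn a raw "item, quantity" string into structured fields."""
    item, qty = str(payload["raw"]).split(",")
    return {"item": item.strip(), "quantity": int(qty)}


def price_order(payload: Payload) -> Payload:
    """Add a total using a hypothetical, hard-coded price table."""
    unit_prices = {"widget": 2.5}
    total = unit_prices[payload["item"]] * payload["quantity"]
    return {**payload, "total": total}


def format_receipt(payload: Payload) -> Payload:
    """Produce the final output of the application."""
    line = f"{payload['quantity']} x {payload['item']} = {payload['total']:.2f}"
    return {"receipt": line}


def cascade(functions: List[Function], payload: Payload) -> Payload:
    """Feed the output of each function into the next one, in order."""
    return reduce(lambda acc, fn: fn(acc), functions, payload)


result = cascade([parse_order, price_order, format_receipt], {"raw": "widget, 4"})
print(result["receipt"])  # 4 x widget = 10.00
```

No function knows about the others; the "application" is only the ordered list handed to `cascade`, which is why any one of them could be swapped out or scaled independently.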

I believe the next trick is going to be related back to a platform mechanism. We're going to need a schematic or blueprint to create / modify the application. Something that can understand all of the sub-component elements and provide the messaging and feedback necessary to execute more complex arrangements of applications.

A platform that does Application Chaining.
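To make the idea a little more concrete, here is one possible sketch of such a platform in Python. It is an assumption-laden toy, not a description of any existing product: `ChainingPlatform`, its `register` and `run` methods, the blueprint format (an ordered list of function names), and the two sample functions are all hypothetical.

```python
from typing import Callable, Dict, List

Function = Callable[[dict], dict]


class ChainingPlatform:
    """Hypothetical platform that executes a blueprint of named functions."""

    def __init__(self) -> None:
        self.registry: Dict[str, Function] = {}
        self.events: List[dict] = []  # feedback recorded for the operator

    def register(self, name: str, fn: Function) -> None:
        """Make a decoupled function known to the platform under a name."""
        self.registry[name] = fn

    def run(self, blueprint: List[str], payload: dict) -> dict:
        """Execute each named step in order, passing the payload along."""
        for step in blueprint:
            if step not in self.registry:
                raise KeyError(f"unknown function in blueprint: {step}")
            payload = self.registry[step](payload)
            # Record which step ran and which fields it produced.
            self.events.append({"step": step, "output_keys": sorted(payload)})
        return payload


platform = ChainingPlatform()
platform.register("normalize", lambda p: {"text": p["text"].strip().lower()})
platform.register("count_words", lambda p: {**p, "words": len(p["text"].split())})

# The blueprint is the schematic: modifying the application means editing this list.
blueprint = ["normalize", "count_words"]
output = platform.run(blueprint, {"text": "  Hello Serverless World "})
print(output)
print(platform.events)
```

The blueprint is data rather than code, so it is the kind of thing a simplified editor could generate, and the `events` list hints at the feedback the platform operator would see.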

This platform could also abstract away the complexity of creating an application, with the most simplified models providing a GRAPHICAL interface for building one.

Also, the sensors that this platform would provide would be incredibly valuable to its operator. They would provide advance knowledge of new methodologies of applications and sub-components.